phase shift issues and thoughts

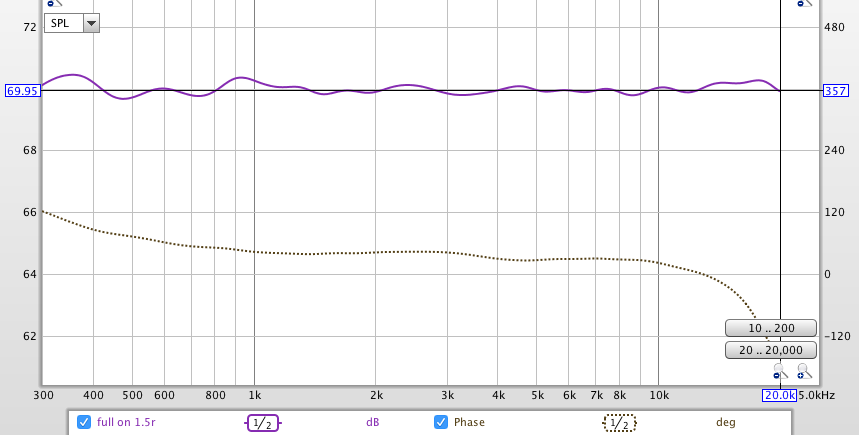

(Attached pic) Phase shift with a broad-range driver with high-shelf EQ (for mid- and high-frequency duty)

While phase shift affects signal timing relative to the original signal, with a single driver it isn't relative to a second, displaced driver anywhere in its frequency range, so I'm thinking it's indiscernible by listening (it can't be destructive to another driver, because there is no other).

Both systems (broad-range, and multi-driver with crossovers) exhibit constructive and destructive interference. In the former it's a result of wavelength relative to driver circumference; in the latter it's a result of driver separation (with the axes of the nulls determined by the crossover configuration).
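To put a rough number on the driver-separation case, here is a minimal sketch. It assumes two idealized point sources playing the same frequency at equal level and in phase (roughly the situation around the crossover point), a far-field listener, and 343 m/s for the speed of sound. The function name and the 0.15 m spacing are just illustrative, not taken from any particular speaker.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for room-temperature air

def first_null_frequency(spacing_m, angle_deg):
    """Lowest frequency at which two in-phase point sources cancel
    when heard from angle_deg off the axis between them (far field)."""
    # Extra distance the sound from the farther source has to travel.
    path_diff = spacing_m * math.sin(math.radians(angle_deg))
    if path_diff <= 0:
        return math.inf  # on axis: no path difference, so no null
    # Cancellation happens when the path difference is half a wavelength.
    return SPEED_OF_SOUND / (2.0 * path_diff)

# Hypothetical example: drivers 0.15 m apart, listener 30 degrees off axis.
print(round(first_null_frequency(0.15, 30.0)))
```

With those assumed numbers the first null lands at roughly 2.3 kHz. Real crossovers shift the angle of that null depending on their phase relationship, so treat this only as a ballpark.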

So “it’s always somethin’”…

It’s how we each set out our project’s goals and balance our perceived results. And sometimes the best offense is a great defense (i.e. attenuating the negatives).

So I’m pondering the effects of separating the higher frequencies from the low-frequency information (via a crossover) and playing them through independent drivers... I.e., think of a single-membrane microphone recording the complex music signal as a net composition of small waves riding on bigger waves. Doesn't pulling out the small waves, without reference to the larger waves they rode on (as recorded at the microphone), cause added phase issues? (Sorry, kinda hard to describe.)
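One way to poke at this numerically, as a sketch only: take an ideal first-order crossover (a textbook low-pass 1/(1+s) and high-pass s/(1+s), with s normalized to the crossover frequency), and add the two branches back together as if both drivers sat at the same point in space. The 2000 Hz crossover and the function name are assumptions for illustration.

```python
import cmath
import math

def first_order_crossover(freq_hz, crossover_hz):
    """Complex responses of an ideal first-order low-pass / high-pass pair."""
    s = 1j * freq_hz / crossover_hz  # normalized j*w/wc
    low = 1 / (1 + s)   # woofer branch
    high = s / (1 + s)  # tweeter branch
    return low, high

CROSSOVER_HZ = 2000.0  # assumed crossover point
for f in (100.0, 1000.0, 2000.0, 5000.0, 10000.0):
    low, high = first_order_crossover(f, CROSSOVER_HZ)
    total = low + high  # acoustic sum if the drivers were coincident
    print(f, 
          round(math.degrees(cmath.phase(low)), 1),
          round(math.degrees(cmath.phase(high)), 1),
          round(abs(total), 6),
          round(math.degrees(cmath.phase(total)), 6))
```

Each branch on its own is phase shifted (the low-pass lags, the high-pass leads), but in this idealized case the sum comes back with unit magnitude and zero phase, so the small waves keep their relationship to the big ones. That only holds for this particular filter pair and coincident sources; higher-order crossovers generally sum with some leftover phase rotation even when the magnitude is flat, and physical driver offset adds the path-length effects on top.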

Thoughts?

Comments

So, isn't some of the small wave's reference (as recorded) now missing?

And in the example article, it's adding two out-of-phase signals, which is somewhat different from a single comprehensive music recording.

True, yes, and each instrument / voice has a broad, multi-frequency dynamic range… but so as not to add layer upon layer, and to see the forest for the trees (focusing on the issue of lack of continuity of signals when frequency presentation is separated across displaced drivers), I simplified it to a single mic recording. There are further unique issues in playback, where speakers may be slicing and dicing among 2, 3 or more drivers (but it's the smaller waves, from the 1 to 5 kHz range and up, that are potentially most problematic).

Sorry, not so easy for me to explain in writing (I'm a visual thinker).

Ha, if only.

Unfortunately the mirror doesn’t let me forget I’m getting older; for the rest I can find solutions...