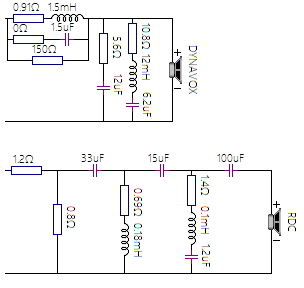

Here's mine. Ben aptly pointed out that the pad is eating a good bit (a ton) of unnecessary power. In my defense, this is one of the first passives I've built, but it's something to take note of if anyone ever tries to replicate this design. Try shifting the shunt resistor back a cap or two; I haven't had time to play around with it since I got back. It can surely be done while retaining the general voicing and preventing a catastrophic meltdown.
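To put rough numbers on how a pad "eats" power, here is a minimal Python sketch of a plain resistive L-pad (series resistor, then a shunt resistor across the tweeter). It assumes the tweeter is a pure resistor and ignores the surrounding high-pass filter. The function name and the resistor values are hypothetical, not EQ's actual parts.

```python
import math

def lpad_power(v_in, r_series, r_shunt, r_tweeter):
    """Split the power drawn by a resistive L-pad feeding a purely resistive tweeter model."""
    r_par = r_shunt * r_tweeter / (r_shunt + r_tweeter)  # shunt in parallel with tweeter
    i_total = v_in / (r_series + r_par)                   # current from the amplifier
    v_out = i_total * r_par                               # voltage left across the tweeter
    return {
        "series_W": i_total ** 2 * r_series,
        "shunt_W": v_out ** 2 / r_shunt,
        "tweeter_W": v_out ** 2 / r_tweeter,
        "atten_dB": 20 * math.log10(v_out / v_in),
        "load_ohm": r_series + r_par,                     # what the amplifier sees
    }

# Hypothetical values: 2.83 V drive, 3 ohm series, 4 ohm shunt, 6 ohm tweeter model.
p = lpad_power(v_in=2.83, r_series=3.0, r_shunt=4.0, r_tweeter=6.0)
for key, value in p.items():
    print(f"{key:>10}: {value:.3f}")
```

With these example values the two pad resistors dissipate several times the power that reaches the tweeter, and the amplifier sees a lower load than the tweeter alone. In a real network the shunt sits among reactive parts: if it is placed before the first series capacitor, it hangs directly across the amplifier at all frequencies, bass included, whereas moving it behind a capacitor or two limits it to the tweeter's passband.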

Here are the response curves. The acoustic crossover point is squarely at 1.5 kHz, LR4 both ways. Electrically, the tweeter is a textbook LR4; the woofer is simply a parallel notch (series w/ tweeter). The woofer's Le is so high I don't really need anything else up there.

It's a fairly scandalizing graph, but every bit of it is intentional. I'll try to break it down.

The low XO point is simply a byproduct of prioritizing power response, together with my doubts about the mid-high capabilities of the nasty Dynavox woofer. Surprisingly, the SB tweeter can take a low XO point, very low for a ring dome, at least as far as harmonic distortion goes. It was never played too loudly, so that helped too.

The high-frequency lift is entirely a function of the narrowing top end of the SB29 (and all ring domes); it is certainly not a show trick to turn heads. This worked well, especially given the thin carpeting in the venue and the audience absorbing the upper end of the treble spectrum. It would have sounded exceedingly dark otherwise.

Finally, there's the massive 2.5k dip. I'd much rather it weren't there, but the alternative is a tremendous off-axis bloom right in the region where hearing is most painfully sensitive (as the opera track demonstrated). Off-axis response is talked about a lot these days, and I think its importance is often misstated. It's situational, but here it simply cannot be ignored, so the price is paid in the on-axis 2.5k dip.

The little blip in the 5.2k area is not a design feature. I don't want it there, but not badly enough to actually notch it out.
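For reference, the ideal target behind "LR4 both ways" at 1.5 kHz can be checked numerically. This sketch models only the textbook 4th-order Linkwitz-Riley acoustic target, not EQ's electrical network or the drivers: each side is -6 dB at the crossover point, and the two sum to a flat (all-pass) magnitude.

```python
import math

def lr4_pair(f_hz, fc_hz):
    """Complex responses (low-pass, high-pass) of an ideal LR4 crossover at f_hz."""
    s = 2j * math.pi * f_hz
    w0 = 2 * math.pi * fc_hz
    d = s * s + math.sqrt(2) * w0 * s + w0 * w0  # 2nd-order Butterworth denominator
    lp = (w0 * w0 / d) ** 2                       # LR4 = Butterworth squared
    hp = (s * s / d) ** 2
    return lp, hp

def db(x):
    return 20 * math.log10(abs(x))

fc = 1500.0  # acoustic crossover point quoted in the post
for f in (375.0, 750.0, 1500.0, 3000.0, 6000.0):
    lp, hp = lr4_pair(f, fc)
    print(f"{f:6.0f} Hz  LP {db(lp):7.2f} dB  HP {db(hp):7.2f} dB  sum {db(lp + hp):5.2f} dB")
```

The summed magnitude stays at 0 dB while its phase still rotates, which is why LR4 is described as having in-phase outputs at the crossover rather than as phase-linear.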

It's been a cool project for me, coming from an exclusively DSP background where most designs are soffit-mounted, so diffraction energy is never a problem. I was quite shocked at how much energy is radiated from the baffle edge in the 2-3k region. I was equally surprised at the exceedingly narrow top end of the SB29 tweeter. All the HF that would come from the dome tip is effectively plugged, and beaming becomes a real problem.
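A crude way to see why a baffle edge shows up in the low kHz is to treat the edge as a second, inverted source of about half strength, delayed by the tweeter-to-edge distance. This single-edge, on-axis model is a common first-order approximation, not a real diffraction simulation. Real baffles have several edge distances that smear the ripple, and the 7 cm distance below is hypothetical.

```python
import cmath
import math

C = 343.0  # speed of sound in m/s (room-temperature assumption)

def edge_diffraction_db(f_hz, d_edge_m, k=0.5):
    """On-axis level (dB re direct sound) of the direct sound plus one inverted edge source
    of relative strength k arriving d_edge_m metres later."""
    delay = d_edge_m / C
    h = 1 - k * cmath.exp(-2j * math.pi * f_hz * delay)
    return 20 * math.log10(abs(h))

def first_peak_and_dip(d_edge_m):
    """Extra path of half a wavelength gives the first peak; a full wavelength gives the first dip."""
    return C / (2 * d_edge_m), C / d_edge_m

peak, dip = first_peak_and_dip(0.07)  # hypothetical tweeter-to-edge distance
print(f"peak ~{peak:.0f} Hz ({edge_diffraction_db(peak, 0.07):+.1f} dB), "
      f"dip ~{dip:.0f} Hz ({edge_diffraction_db(dip, 0.07):+.1f} dB)")
```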

Welcome to the forum, EQ! It was good talking to you about your xover design and your background designing in-wall speakers. Congrats again on your winning design. I just finished entering your xover into the VituixCAD spinorama model and got a very good match to your on-axis FR and filter curves. As you note above, impedance is a bit on the low side.

Thanks EQ for posting the detail above, and congratulations on your big win!

The collection is now complete. Full set of comparison data is attached.

Realized I didn't post the house tracks...

1- Pink Noise; for level setting.

2- Jeff Beal - Counting Meters; from the Monk Season 1 soundtrack

3- Jan & Dean - Drag City; Engine revving and take off intro only

4- Wild Cherry - Play That Funky Music

5- Oasis - D'You Know What I Mean; Airplane flyby intro only

6- Emmylou Harris - Deeper Well; album version

7- Crickets sound; Recorded by Eric DeYoung ~2012, 10 seconds.

8- Chris Stapleton - Whiskey and You; sparse cut, lots of space.

9- Medium Traffic; Excelsior Ultimate Sound Effects, 10 seconds.

10- The Pretty Reckless - 25; She sings in 3-4 different ranges over the course of the song. The rest of the album is NSFW, so be aware.

11- Rain sounds; Recorded by Eric DeYoung ~2012, 10 seconds.

12- Yanni - The Storm; Cello intro only.

13- Stream Babbling; Excelsior Ultimate Sound Effects, 10 seconds.

14- Boxenkiller

InDIYana Event Website

@Tom_S said:

Glad to see I'm not the only one here who likes The Pretty Reckless. My wife actually turned me on to them.

I'm starting to export overlay-type comparison graphs from VituixCAD, but I will need to limit the number of overlays on each graph for clarity; otherwise the graph becomes too busy. For instance, here is the Predicted In-Room (PIR) FR overlay of all crossovers on a single graph:

But here is the same graph with only the top 3 contestants, which makes the curves much easier to compare. EQ's xover has a very smooth, downward-sloping PIR curve, which also earned the highest VituixCAD preference score.
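The "smooth, downward-sloping" quality can be put into numbers by fitting a straight line to the PIR on a log-frequency axis. As I understand Olive's model, its smoothness term SM_PIR is the r² of such a fit over roughly 100 Hz to 16 kHz. The sketch below computes that r² plus the slope in dB/octave. It is plain Python, the band limits and function name are my assumptions, and VituixCAD's own implementation may differ in details.

```python
import math

def pir_slope_and_smoothness(freqs, pir_db, f_lo=100.0, f_hi=16000.0):
    """Least-squares line of PIR (dB) against log2(frequency) between f_lo and f_hi.
    Returns (slope in dB/octave, r^2). r^2 does not depend on the log base chosen."""
    pts = [(math.log2(f), y) for f, y in zip(freqs, pir_db) if f_lo <= f <= f_hi]
    n = len(pts)  # needs at least two distinct frequencies in the band
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    syy = sum((y - my) ** 2 for _, y in pts)
    slope = sxy / sxx
    r2 = (sxy * sxy) / (sxx * syy) if syy > 0 else float("nan")  # undefined for a dead-flat line
    return slope, r2
```

A higher r² means the curve hugs its trend line more closely, which is the property the preference score rewards.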

I heard the song on the radio at work and thought it'd work very well for something like this. Who knew Cindy Lou Who could grow up and sing like that? For the most part, I don't care for the 'fiery' part of their content, if you catch my drift. Other than that, she can sing, and the group has a solid sound. Just don't open the liner notes of this particular album if you'd prefer not to see nudity.

Wow, the PIRs for mine and EQ's are a virtual overlay, barring the 1.8k dip and above 15k, save a dB here and there. That's pretty remarkable!

@tajanes said:

EQ's is smoothest from 1 to 5k (the highest ear-sensitivity range), and maybe there's something to the slight BBC dip, plus a bit of high-end finish (detail?).

Sorry I couldn't attend; very cool contest idea!

Are the house tracks available for download anywhere?

Ron

I have not uploaded them anywhere.

The Pretty Reckless track was my least favorite of the house tracks, just behind track 3 (man, that motor sure sounded lazy!).

Here are a few more PIR comparison graphs, limited to 3 curves at a time for clarity. I have used EQ's smoothly sloping PIR curve as an anchor in each graph, so you can clearly see how your personal curve compares to the winning curve.

I have read in a number of places that a BBC dip is intentionally used to balance out the sound power in this critical frequency range. The argument is that the directivity mismatch between a large woofer cone and a small tweeter dome often creates a power-response bloom in the 1.5-4.5 kHz area, and the BBC dip restores the balance.
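The directivity-mismatch argument can be made concrete with the usual ka = 1 rule of thumb: a piston starts to narrow noticeably around f = c / (π·d), where d is its effective diameter. The sketch below uses hypothetical effective diameters (about 13 cm for a woofer cone, 25 mm for a dome), not measured values for the contest drivers.

```python
import math

C = 343.0  # speed of sound, m/s

def ka1_hz(effective_diameter_m):
    """Frequency where ka = 1 (k = 2*pi*f/c, a = radius), i.e. f = c / (pi * d)."""
    return C / (math.pi * effective_diameter_m)

woofer = ka1_hz(0.13)  # hypothetical woofer cone effective diameter
dome = ka1_hz(0.025)   # hypothetical tweeter dome diameter
print(f"woofer narrows from ~{woofer:.0f} Hz, dome from ~{dome:.0f} Hz")
```

If the woofer is already narrowing below the crossover and the tweeter takes over with wide dispersion, sound power sags and then jumps back up through the crossover region. That jump is the bloom the BBC dip is meant to offset.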

I've attached a complete zipped-up set of comparison curves for on-axis FR, listening window FR, Predicted In-Room response (PIR), and power response. Each curve is a small 19 KB .txt file with columns for frequency, magnitude, and phase. If you are interested, simply download the attached file, unzip it, and load the selected files into OmniMic, VituixCAD, PCD, ARTA, SE, etc., to create your own custom overlay comparison graphs.
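For anyone who would rather script the overlays than load them by hand, here is a minimal Python sketch that reads one of these frequency/magnitude/phase .txt files and expresses a curve relative to an anchor, for example EQ's PIR. It assumes whitespace-, comma- or semicolon-separated columns, a dot as the decimal separator, and ascending frequencies. The function names are my own and are not part of any of the programs listed.

```python
import bisect
import math
import re

def load_curve(lines):
    """Parse (frequency, magnitude, phase) rows; header, comment and blank lines are skipped."""
    curve = []
    for line in lines:
        fields = [t for t in re.split(r"[\s,;]+", line.strip()) if t]
        try:
            f, mag, phase = (float(t) for t in fields[:3])
        except ValueError:  # non-numeric text or fewer than three columns
            continue
        curve.append((f, mag, phase))
    return curve

def interp_db(curve, f):
    """Magnitude at f, interpolated linearly on a log-frequency axis (clamped at the ends)."""
    freqs = [p[0] for p in curve]
    i = bisect.bisect_left(freqs, f)
    if i == 0:
        return curve[0][1]
    if i >= len(curve):
        return curve[-1][1]
    (f0, m0, _), (f1, m1, _) = curve[i - 1], curve[i]
    t = (math.log(f) - math.log(f0)) / (math.log(f1) - math.log(f0))
    return m0 + t * (m1 - m0)

def delta_vs_anchor(curve, anchor):
    """(frequency, dB difference of curve relative to anchor) at each of curve's frequencies."""
    return [(f, m - interp_db(anchor, f)) for f, m, _ in curve]

# Usage (file names are placeholders):
# with open("anchor_pir.txt") as fa, open("my_pir.txt") as fb:
#     diff = delta_vs_anchor(load_curve(fb), load_curve(fa))
```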

@tktran said:

Another neat trick is to see how well the Preference Score correlates with the ranking of ALL the tested speakers.

Are we talking about a 0.86 correlation?

That would be a neat trick, indeed! I have a spreadsheet put together showing the various rankings. However, I hesitate to post it because these preference ranking equations were developed with the listeners sitting at a fixed distance comparing mono loudspeakers. For the event, listeners were spread out all over the room, listening to a pair of speakers in stereo. The tonal balance shifts from bright to dark as you move further back in a room. If you get up and move around during the event, testing the sound in different rows and chairs, you will notice a huge difference in stereo imaging as well as tonal balance.

Also, there are several versions of the preference ranking equations in the VituixCAD program. Which one do I use? The equations were simplified in the latest version of the program and produce different rankings compared to the previous version. So my spreadsheet can have several ranking sorts, depending on how I decide to set it up. Very difficult to understand how this system works.
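If the spreadsheet sort and the event placings are both simple rankings, a rank correlation is one quick way to approach the correlation question. Here is a sketch of Spearman's rho for rankings without ties, with a hypothetical function name. As I understand it, Olive's 0.86 figure was a correlation between predicted and measured preference ratings from controlled mono listening, so the two numbers aren't directly comparable.

```python
def spearman_rho(rank_a, rank_b):
    """Spearman rank correlation of two rankings of the same speakers (assumes no ties)."""
    n = len(rank_a)
    if n != len(rank_b) or n < 2:
        raise ValueError("need two rankings of equal length >= 2")
    d2 = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1 - 6 * d2 / (n * (n * n - 1))

# Hypothetical placings: position of each speaker in the event vs. in a preference-score sort.
print(spearman_rho([1, 2, 3, 4, 5], [2, 1, 3, 5, 4]))
```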

The "preference rating" is based on a patent application by Sean Olive; the original application is available here:

https://patentimages.storage.googleapis.com/95/03/0a/a4a8dbd7d8042c/US20050195982A1.pdf

The caveat with this whole rating system is that it is based on amplitude response only; no other aspects of the speaker are considered, so you can very well have a speaker with a good preference rating that still sounds like crap.
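To make that caveat concrete, here is the regression from Olive's published model as it is commonly quoted (I believe this is the model behind the 0.86 correlation mentioned earlier in the thread). Every input is derived from amplitude response only: narrow-band deviation of the on-axis and PIR curves, low-frequency extension, and PIR smoothness. VituixCAD ships its own variants, so treat this as the original formula, not the program's.

```python
import math

def olive_preference(nbd_on, nbd_pir, lfx_hz, sm_pir):
    """Olive's loudspeaker preference regression (as commonly quoted):
    Pref = 12.69 - 2.49*NBD_ON - 2.99*NBD_PIR - 4.31*LFX + 2.32*SM_PIR
    NBD_ON, NBD_PIR: narrow-band deviation in dB (on-axis, predicted in-room)
    LFX: log10 of the low-frequency extension in Hz
    SM_PIR: smoothness (r^2) of the predicted in-room response
    """
    lfx = math.log10(lfx_hz)
    return 12.69 - 2.49 * nbd_on - 2.99 * nbd_pir - 4.31 * lfx + 2.32 * sm_pir

# Hypothetical metric values, not taken from any contest entry:
print(round(olive_preference(nbd_on=0.5, nbd_pir=0.4, lfx_hz=50, sm_pir=0.8), 2))  # prints 4.78
```

Nothing in the formula sees distortion, dynamics, or stereo imaging, which is exactly the caveat above.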

Erin (Erin's Audio Corner) recently interviewed Sean Olive regarding the preference rating system. I've watched the video a couple times and I "think" I am beginning to understand some of the concepts.

https://www.youtube.com/watch?app=desktop&v=IEtYH03pfOI

@rstillin said:

I'm finding this one of the most interesting threads on speaker design that I've read in a long time, thanks everyone.

It's helped me understand VituixCAD much better. Also, can someone clarify for me which of these crossover entries were tested (voiced) with an actual speaker before the competition and which were not, and how did they "place" in the rankings? E.g., subjectively, were the crossovers that were not voiced generally all "acceptable" for a final design? Finally, I wonder if tweaking the "top" crossover entries to increase the preference rating would actually result in a better-sounding speaker, or just a slightly different one?

Per Wolf's post on May 8th, Wolf and Jack were the only two who voiced their crossovers. The top 3 were EQ (winner), Wolf, and Jack.

I voiced mine over a couple-month period. The tweeter Fs comp and 0.5 ohm R were the last two things I changed. I was the only person to triple-notch the woofer and one of two entries to comp the Fs.

I really liked the sound when I was done.
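For anyone following along, the tuning frequency of an LC notch or Fs-compensation network like the ones described here comes from f0 = 1 / (2π√(LC)). This sketch computes it and solves for L given a chosen C. The 700 Hz and 22 µF values and the function names are hypothetical, and the series resistor, which sets the notch depth and width, isn't modeled.

```python
import math

def lc_resonance_hz(l_henry, c_farad):
    """Centre frequency of an LC (or LCR) notch network: f0 = 1 / (2*pi*sqrt(L*C))."""
    return 1 / (2 * math.pi * math.sqrt(l_henry * c_farad))

def inductance_for(f0_hz, c_farad):
    """Inductance that tunes an LC network with capacitance c_farad to f0_hz."""
    return 1 / ((2 * math.pi * f0_hz) ** 2 * c_farad)

l = inductance_for(700.0, 22e-6)  # hypothetical tweeter Fs and chosen capacitor
print(f"L = {l * 1e3:.2f} mH, check f0 = {lc_resonance_hz(l, 22e-6):.1f} Hz")
```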

@tktran said:

Did EQ voice it? Or did he use VituixCAD exclusively?

I ask because I understand the science behind Kimmo's recommendations of taking real 360-degree measurements and doing the crossover from there... then I voice for my room.

OTOH, there may be some advantages to NOT voicing it, because you don't know the room you're going to be demoing in: get the preference score "right" (e.g. 7.x), get the phase "right" (phase coherent at the crossover points, or linear phase), get the group delay "right", get the sensitivity and max SPL right, get the non-linear distortion "right", get the looks "right".

Maybe then you have a winner-winner chicken dinner, and only need a little time fine-tuning with my dodgy ears in my dodgy room.

Beats measuring just on-axis/power response, going back and forth and back and forth, and doing 8 different crossovers over 3 months (my previous technique).

Nope, he was simulation only, and used another program.

No. EQ did not voice his xover or use VituixCAD to design it. I'm not sure what software package he used, but I know it was not VituixCAD. He finished the soldering in the adjoining room just moments before the competition began, borrowing parts and wire from Meredith C. as needed. Dcibel and I used the full spinorama data set in VituixCAD to design our xovers. There may have been others who used it as well, but I think most designers used either XSim or PCD with the on-axis and offset data set.

I used PCD for offset, then had to use XSim for the required triple-notching woofer circuit.

Did anyone else in the competition use VituixCAD or the full spinorama data set?

@EQ_

Those graphs look very familiar; I thought they were from VituixCAD2? What did you use to model your crossover?

The blue/green/red look good; the pink (reference axis) not so much, but I tend not to focus on that.

In fact, when tuning my crossover I look at the listening window, power response, and predicted in-room response, and I use the amplitude response simultaneously with my phase response.

In years gone by I only focussed on my amplitude and phase response.

I'm sure we'd all love to hear your process and learn more.

Actually, I used Vituix too, but I haven't updated it since 2017, so I think the aesthetics/schematic layout differs so significantly from today's V2 that it is hardly recognizable :-)

On the matter of power response and diffraction: I'm used to seeing perfect off-axis responses because I usually work with waveguides, felt, and quasi-infinite baffles (soffit/flush mount). All these disastrous off-axis peaks and dips we've been talking about are solely a function of diffraction (mostly baffle diffraction). It is a huge topic that deserves a lot more attention in research and blind testing, because its effects are hugely audible AND not necessarily negative, subjectively.

For example, one of the projects (I can't remember the name) featured a TC9/ribbon combo with an SAE felt-treated baffle (meaning minimal diffraction). That was an immediately familiar presentation that I could relate to; the soundstage was dramatically different from all the other speakers on demo, but I could not figure out which sounded better to me. It just sounded... different.

I would also suggest that, for the purposes of this contest, where listening time is limited and the source material (music) is unfamiliar to most, HF balance is much more important than getting BSC right. It is almost as if we could invent a new compensation metric, high-frequency compensation (HFC...), because in a large, non-ideal acoustic space such as the Hampton Inn, the tweeter's radiation properties, together with the room furnishings and other factors, require some deviation from a flat axial curve and more attention to the overall treble 'tilt', e.g. the spinorama predicted in-room curve. It is, however, a lot more nuanced than simply adding or taking away a few ohms from the HF pad, and to that end an in-depth ABX test would definitely be fruitful.
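For reference, the spinorama predicted in-room curve is itself a weighted blend of other spinorama curves. As I understand CTA-2034-A, it is 12% listening window, 44% early reflections, and 44% sound power, combined as squared pressure rather than as dB. A minimal sketch with hypothetical helper names, assuming the three curves share the same frequency points:

```python
import math

def db_to_p2(level_db):
    return 10 ** (level_db / 10)  # dB SPL -> squared pressure (relative units)

def p2_to_db(p2):
    return 10 * math.log10(p2)

def estimated_in_room(lw_db, er_db, sp_db):
    """PIR = 12% listening window + 44% early reflections + 44% sound power (squared pressure)."""
    return [
        p2_to_db(0.12 * db_to_p2(lw) + 0.44 * db_to_p2(er) + 0.44 * db_to_p2(sp))
        for lw, er, sp in zip(lw_db, er_db, sp_db)
    ]

# Hypothetical single-frequency example: the result sits between the three inputs.
print(estimated_in_room([90.0], [84.0], [80.0]))
```

Because early reflections and sound power carry 88% of the weight, the treble tilt of the PIR is dominated by how the tweeter radiates off-axis, not by the on-axis curve alone.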

In my opinion, InDIYana, and any other gathering for that matter, does not strictly evaluate design idealism in crossover sophistication but rather holistic design pragmatism, if you will, because the room and other random variables (such as wildly differing listening positions) have a huge impact on a contestant's overall showing. It doesn't mean that the loudspeaker has to be designed specifically for the contest, merely that the listening conditions favor some design qualities more than others.

As a footnote, I'd add that the aforesaid diffraction artefacts have nothing to do with SBIR, comb filtering, and the like. Ironically, those are talked about a lot more often than edge diffraction, and the two are easily conflated.
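The difference in scale is easy to see with rough numbers. SBIR is set by the distance from the woofer to the wall behind it, with the first cancellation near c / (4d) in the usual simplified model. Baffle-edge effects, by contrast, are set by centimetre-scale distances on the baffle itself and land in the low kHz. The wall distance below and the function name are hypothetical.

```python
C = 343.0  # speed of sound, m/s

def sbir_first_null_hz(wall_distance_m):
    """Quarter-wave cancellation from the boundary behind the speaker: f = c / (4 * d)."""
    return C / (4 * wall_distance_m)

print(f"SBIR first null, woofer 0.8 m from the wall: ~{sbir_first_null_hz(0.8):.0f} Hz")
```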